Scientists have used artificial intelligence (AI) to build brand-new viruses, opening the door to AI-designed forms of life.

The viruses are different enough from existing strains to potentially qualify as new species. They are bacteriophages, which means they attack bacteria, not humans, and the authors of the study took steps to ensure their models couldn’t design viruses capable of infecting people, animals or plants.

In a separate study, published Thursday (Oct. 2) in the journal Science, researchers from Microsoft revealed that AI can get around safety measures that would otherwise prevent bad actors from, for instance, ordering toxic molecules from supply companies.

After uncovering this vulnerability, the research team rushed to create software patches that greatly reduce the risk. The AI design software involved currently requires specialized expertise and access to particular tools that most members of the public can't use.

Combined, the new studies highlight the risk that AI could design a new lifeform or bioweapon that poses a threat to humans — potentially unleashing a pandemic, in a worst-case scenario. So far, AI doesn’t have that capability. But experts say that a future where it does isn’t so far off.

To prevent AI from posing a danger, experts say, we need to build multi-layer safety systems, with better screening tools and evolving regulations governing AI-driven biological synthesis.

The dual-use problem

At the heart of the issue with AI-designed viruses, proteins and other biological products is what’s known as the “dual-use problem.” This refers to any technology or research that could have benefits, but could also be used to intentionally cause harm.

A scientist studying infectious diseases might want to genetically modify a virus to learn what makes it more transmissible. But someone aiming to spark the next pandemic could use that same research to engineer a perfect pathogen. Research on aerosol drug delivery can help people with asthma by leading to more effective inhalers, but the designs might also be used to deliver chemical weapons.

Stanford doctoral student Sam King and his supervisor Brian Hie, an assistant professor of chemical engineering, were aware of this double-edged sword. They wanted to build brand-new bacteriophages (or "phages," for short) that could hunt down and kill bacteria in infected patients. Their efforts were described in a preprint uploaded to the bioRxiv database in September, and the preprint has not yet been peer-reviewed.

Phages that scientists have sampled from the environment and cultivated in the lab are already being tested as potential add-ons or alternatives to antibiotics, which could help solve the problem of antibiotic resistance and save lives. But phages are viruses, and some viruses are dangerous to humans, raising the theoretical possibility that the team could inadvertently create a virus that harms people.

The researchers anticipated this risk and tried to reduce it by ensuring that their AI models were not trained on viruses that infect humans or any other eukaryotes, the domain of life that includes plants, animals and everything that's not a bacterium or an archaeon. They also tested the models to make sure they couldn't independently come up with viruses similar to those known to infect plants or animals.

With safeguards in place, they asked the AI to model its designs on a phage already widely used in laboratory studies. Anyone looking to build a deadly virus would likely have an easier time using older, well-established methods, King said.

“The state of this method right now is that it’s quite challenging and requires a lot of expertise and time,” King told Live Science. “We feel that this doesn’t currently lower the barrier to any more dangerous applications.”

Centering security

But in a rapidly evolving field, such precautionary measures are being invented on the go, and it’s not yet clear what safety standards will ultimately be sufficient. Researchers say the regulations will need to balance the risks of AI-enabled biology with the benefits. What’s more, researchers will have to anticipate how AI models may weasel around the obstacles placed in front of them.

“These models are smart,” said Tina Hernandez-Boussard, a professor of medicine at the Stanford University School of Medicine, who advised on the safety of the AI models used in the new preprint study, including their viral sequence benchmarks. “You have to remember that these models are built to have the highest performance, so once they’re given training data, they can override safeguards.”

Thinking carefully about what to include and exclude from the AI’s training data is a foundational consideration that can head off a lot of security problems down the road, she said. In the phage study, the researchers withheld data on viruses that infect eukaryotes from the model. They also ran tests to ensure the models couldn’t independently figure out genetic sequences that would make their bacteriophages dangerous to humans — and the models didn’t.

Another thread in the AI safety net involves the translation of the AI’s design — a string of genetic instructions — into an actual protein, virus, or other functional biological product. Many leading biotech supply companies use software to ensure that their customers aren’t ordering toxic molecules, though employing this screening is voluntary.

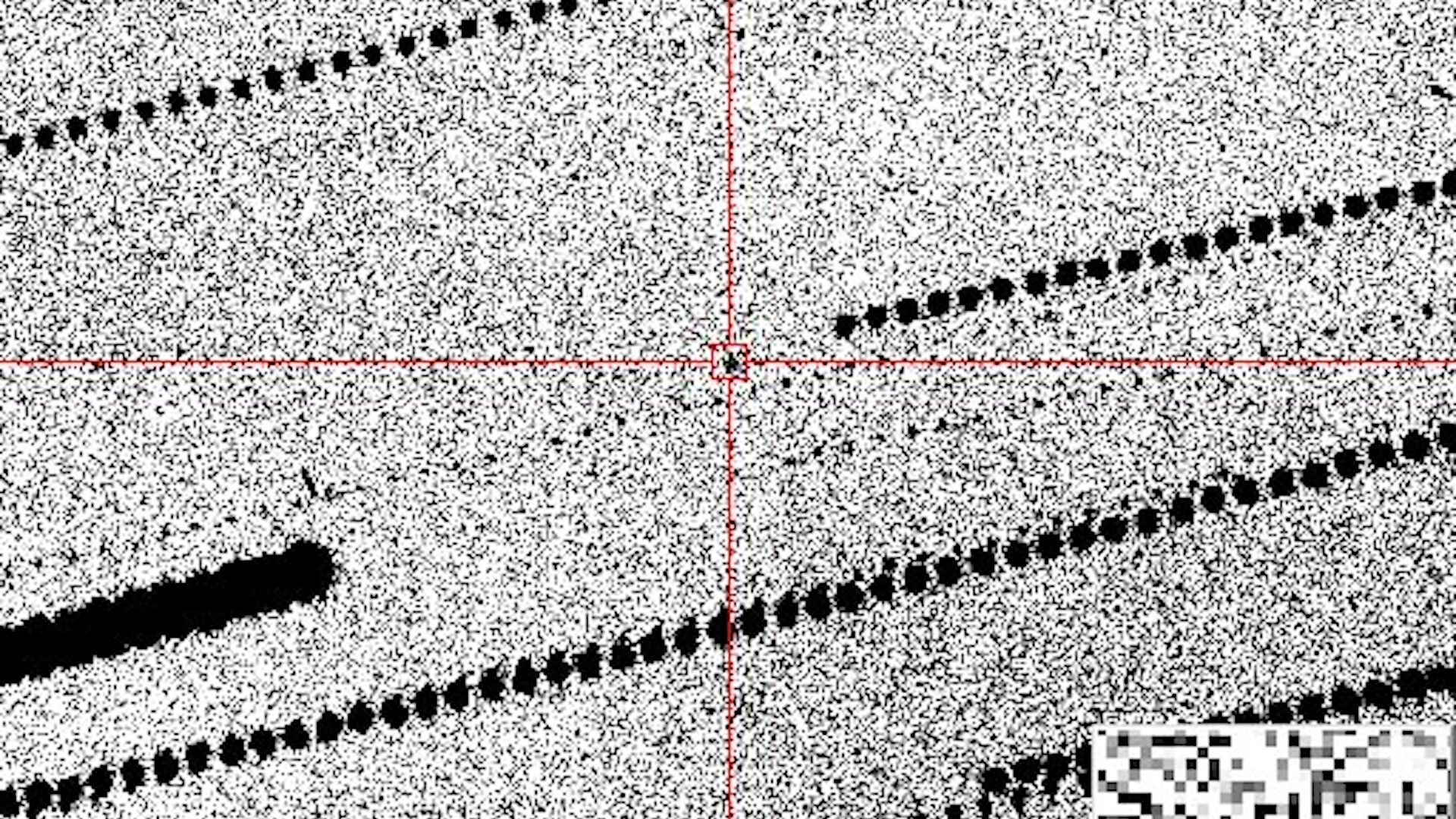

But in their new study, Microsoft researchers Eric Horvitz, the company’s chief science officer, and Bruce Wittmann, a senior applied scientist, found that existing screening software could be fooled by AI designs. These programs compare the genetic sequences in an order with sequences known to produce toxic proteins. But AI can generate very different genetic sequences that likely encode proteins with the same toxic function. As a result, these AI-generated sequences don’t necessarily raise a red flag with the software.

The researchers borrowed a process from cybersecurity to alert trusted experts and professional organizations to this problem and launched a collaboration to patch the software. “Months later, patches were rolled out globally to strengthen biosecurity screening,” Horvitz said at a Sept. 30 press conference.

These patches reduced the risk, though across four commonly used screening tools, an average of 3% of potentially dangerous gene sequences still slipped through, Horvitz and colleagues reported.

The researchers also had to consider security when publishing their research. Scientific papers are meant to be replicable, meaning other researchers have enough information to check the findings. But publishing all of the data about sequences and software could clue bad actors in to ways to circumvent the security patches.

“There was an obvious tension in the air among peer reviewers about, ‘How do we do this?’” Horvitz said.

The team ultimately landed on a tiered access system in which researchers wanting to see the sensitive data will apply to the International Biosecurity and Biosafety Initiative for Science (IBBIS), which will act as a neutral third party to evaluate the request. Microsoft has created an endowment to pay for this service and to host the data.

It’s the first time that a top science journal has endorsed such a method of sharing data, said Tessa Alexanian, the technical lead at Common Mechanism, a genetic sequence screening tool provided by IBBIS. “This managed access program is an experiment and we’re very eager to evolve our approach,” she said.

What else can be done?

There is not yet much regulation around AI tools. Screenings like the ones studied in the new Science paper are voluntary. And there are devices that can build proteins right in the lab, no third party required — so a bad actor could use AI to design dangerous molecules and create them without gatekeepers.

There is, however, growing guidance around biosecurity from professional consortiums and governments alike. For example, a 2023 presidential executive order in the U.S. called for a focus on safety, including “robust, reliable, repeatable, and standardized evaluations of AI systems” and policies and institutions to mitigate risk. The Trump administration is working on a framework that will limit federal research and development funds for companies that don’t do safety screenings, Diggans said.

“We’ve seen more policymakers interested in adopting incentives for screening,” Alexanian said.

In the United Kingdom, a state-backed organization called the AI Security Institute aims to foster policies and standards to mitigate the risk from AI. The organization is funding research projects focused on safety and risk mitigation, including defending AI systems against misuse, defending against third-party attacks (such as injecting corrupted data into AI training systems), and searching for ways to prevent public, open-use models from being used for harmful ends.

The good news is that as AI-designed genetic sequences grow more complex, they actually give screening tools more information to work with. That means whole-genome designs, like King and Hie’s bacteriophages, would be fairly easy to screen for potential dangers.

“In general, synthesis screening operates better on more information than less,” Diggans said. “So at the genome scale, it’s incredibly informative.”

Microsoft is collaborating with government agencies on ways to use AI to detect AI malfeasance. For instance, Horvitz said, the company is looking for ways to sift through large amounts of sewage and air-quality data to find evidence of the manufacture of dangerous toxins, proteins or viruses.

“I think we’ll see screening moving outside of that single site of nucleic acid [DNA] synthesis and across the whole ecosystem,” Alexanian said.

And while AI could theoretically design a brand-new genome for a new species of bacteria, archaea or a more complex organism, there is currently no easy way to turn those AI-generated instructions into a living organism in the lab, King said. Threats from AI-designed life aren’t immediate, but they’re not impossibly far off. Given the new horizons AI is likely to reveal in the near future, there’s a need to get creative across the field, Hernandez-Boussard said.

“There’s a role for funders, for publishers, for industry, for academics,” she said, “for, really, this multidisciplinary community to require these safety evaluations.”