Quantum computing is expected to leave classical computing in the dust when it comes to solving some of the world’s most fiendishly difficult problems. The best quantum machines today have one major weakness, however — they are incredibly error-prone.

That’s why the field is racing to develop and implement quantum error-correction (QEC) schemes to alleviate the technology’s inherent unreliability. These approaches involve building redundancies into the way that information is encoded in the qubits of quantum computers, so that if a few errors creep into calculations, the entire computation isn’t derailed. Without any additional error correction, the error rate in qubits is roughly 1 in 1,000, versus about 1 in 1 trillion for classical computing bits.

The unusual properties of quantum mechanics make this considerably more complicated than error correction in classical systems, though. Implementing these techniques at a practical scale will also require quantum computers that are much larger than today’s leading devices.

But the field has seen significant progress in recent years, culminating in a landmark result from Google’s quantum computing team in December 2024. The company unveiled a new quantum processor called Willow that provided the first conclusive evidence that QEC can scale up to the large device sizes needed to solve practical problems.

“It’s a landmark result in that it shows for the first time that QEC actually works,” Joschka Roffe, an innovation fellow at The University of Edinburgh and author of a 2019 study into quantum error correction, told Live Science. “There’s still a long way to go, but this is kind of the first step, a proof of concept.”

Why do we need quantum error correction?

Quantum computers can harness exotic quantum phenomena such as entanglement and superposition to encode data efficiently and process calculations in parallel, rather than in sequence like classical computers. As a result, for certain types of problems, processing power increases exponentially as qubits are added to a system. But these quantum states are inherently fragile, and even the tiniest interaction with their environment can cause them to collapse.

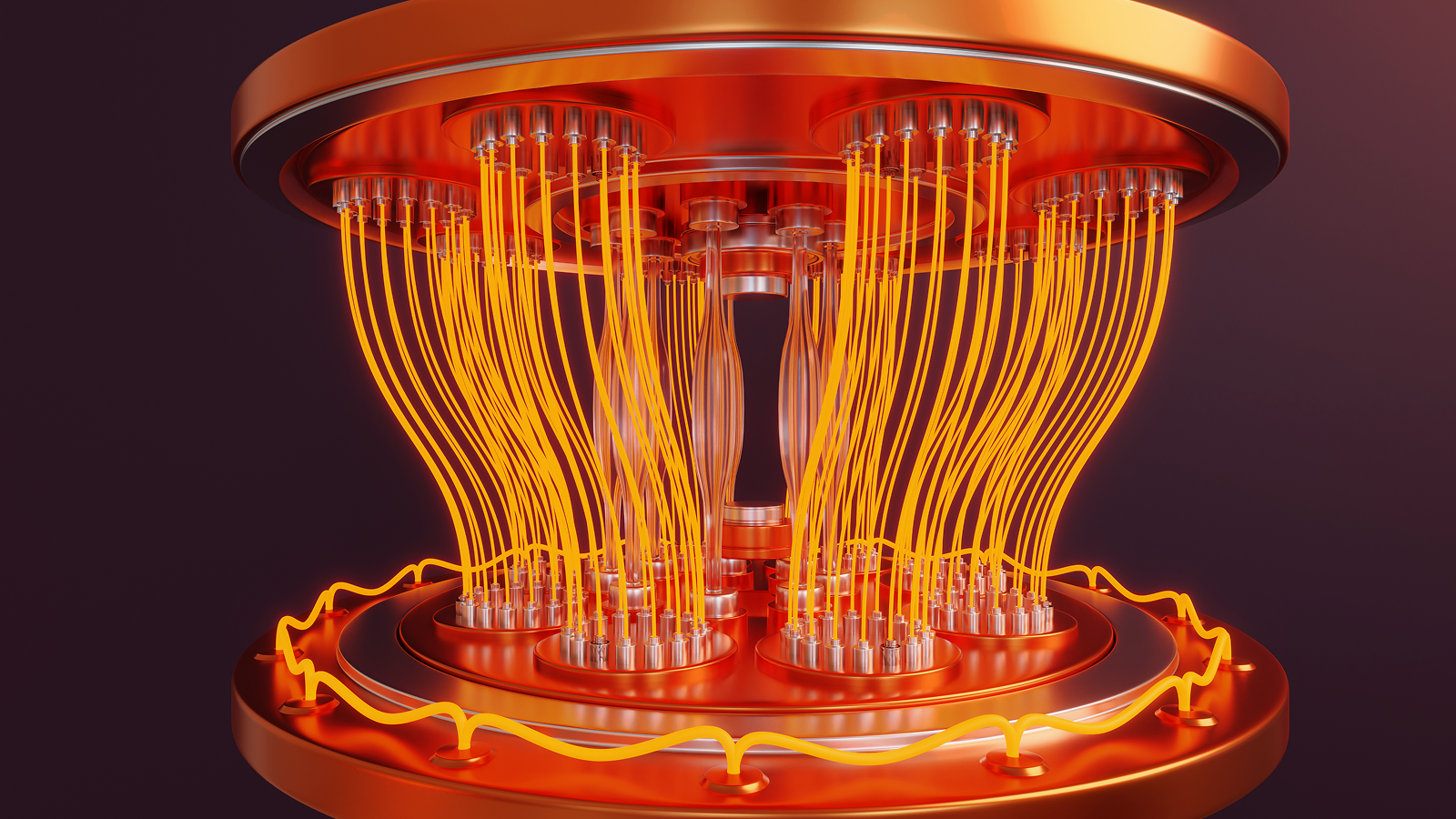

That’s why quantum computers go to great lengths to separate their qubits from external disturbances. This is normally done by keeping them at ultra-low temperatures or in a vacuum — or by encoding them into photons that interact weakly with the environment.

But even then, errors can creep in, and occur at much greater rates than in classical devices. Logical operations in Google’s state-of-the-art quantum processor fail at a rate of about 1 in 100, says Roffe.

“We have to find some way of bridging this gulf so that we can actually use quantum computers to run some of the really exciting applications that we’ve proposed for them,” he said.

QEC schemes build on top of ideas developed in the 1940s for early computers, which were much more unreliable than today’s devices. Modern chips no longer need error correction, but these schemes are still widely used in digital communications systems that are more susceptible to noise.

They work by building redundancy into the information being transmitted. The simplest way to do this is to send the same message multiple times, Roffe said, something known as a repetition code. Even if some copies feature errors, the receiver can work out what the message was by looking at the information that is most often repeated.
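To make the idea concrete, here is a minimal sketch of a classical repetition code in Python. The function names (`encode`, `noisy_channel`, `decode`) and the 10% flip probability are illustrative assumptions, not taken from any particular communications system. The sender repeats a bit an odd number of times, a simulated noisy channel flips some copies at random, and the receiver takes a majority vote.

```python
import random
from collections import Counter

def encode(bit, copies=3):
    """Repeat one classical bit `copies` times (use an odd number to avoid ties)."""
    return [bit] * copies

def noisy_channel(bits, flip_prob, rng):
    """Flip each bit independently with probability `flip_prob`."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]

def decode(bits):
    """Majority vote: return the value that appears most often."""
    return Counter(bits).most_common(1)[0][0]

# Hypothetical example: send the bit 1 as five copies over a channel
# that flips each copy 10% of the time.
rng = random.Random(0)
received = noisy_channel(encode(1, copies=5), flip_prob=0.1, rng=rng)
print(received, "->", decode(received))
```

The vote recovers the original bit as long as fewer than half of the copies are flipped. Note that `decode` reads every copy directly, which is exactly the step that has no straightforward quantum equivalent.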

But this approach doesn’t translate neatly to the quantum world, says Roffe. The quantum states used to encode information in a quantum computer collapse if there is any interaction with the external environment, including when an attempt is made to measure them. This means that it’s impossible to create a copy of a quantum state, something known as the “no-cloning theorem.” As a result, researchers have had to come up with more elaborate schemes to build in redundancy.

What is a logical qubit and why is it so important?

The fundamental unit of information in a quantum computer is a qubit, which can be encoded into a variety of physical systems, including superconducting circuits, trapped ions, neutral atoms and photons (particles of light). These so-called “physical qubits” are inherently error-prone, but it’s possible to spread a unit of quantum information across several of them using the quantum phenomenon of entanglement.

Entanglement refers to a situation in which the quantum states of two or more particles are intrinsically linked with each other. By entangling multiple physical qubits, it’s possible to encode a single shared quantum state across all of them, says Roffe, something known as a “logical qubit.” Spreading out the quantum information in this way creates redundancy, so that even if a few physical qubits experience errors, the overarching information is not lost.

However, the process of detecting and correcting any errors is complicated by the fact that you can’t directly measure the states of the physical qubits without causing them to collapse. “So you have to be a lot more clever about what you actually measure,” Dominic Williamson, a research staff member at IBM, told Live Science. “You can think of it as measuring the relationship between [the qubits] instead of measuring them individually.”

This is done using a combination of “data qubits” that encode the quantum information, and “ancilla qubits” that are responsible for detecting errors in these qubits, says Williamson. Each ancilla qubit interacts with a group of data qubits to check if the sum of their values is odd or even without directly measuring their individual states.

If the value of one of the data qubits has changed, the result of this test will flip, indicating that an error has occurred in that group. Classical algorithms are used to analyze measurements from multiple ancilla qubits to pinpoint the location of the fault. Once this is known, an operation can be performed on the logical qubit to fix the error.
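The parity-check logic described above can be sketched with ordinary bits. The Python below is a classical stand-in, not a quantum simulation: it assumes the simplest possible layout of three data bits and two checks, and the names `CHECKS`, `DECODER`, `measure_syndrome` and `correct` are made up for illustration. Each check plays the role of an ancilla qubit, reporting only whether a pair of neighboring data bits sums to an odd or even number, and a small lookup table plays the role of the classical decoding algorithm.

```python
# Each check compares one pair of neighboring data bits (indices into the data list).
CHECKS = [(0, 1), (1, 2)]

def measure_syndrome(data):
    """Return the parity (0 = even, 1 = odd) of each checked group."""
    return tuple(sum(data[i] for i in group) % 2 for group in CHECKS)

# Lookup-table decoder: which single flipped bit explains each pattern of check results.
DECODER = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(data):
    """Locate a single flipped bit from the check results and flip it back."""
    flipped = DECODER[measure_syndrome(data)]
    fixed = list(data)
    if flipped is not None:
        fixed[flipped] ^= 1
    return fixed

corrupted = [1, 0, 1]  # the encoded value [1, 1, 1] with its middle bit flipped
print(measure_syndrome(corrupted), correct(corrupted))
```

Both valid encodings, `[0, 0, 0]` and `[1, 1, 1]`, give the same all-even check results, so the checks reveal where an error sits without revealing the stored value. This toy version handles only a single bit flip per round; real quantum codes must also catch other kinds of errors.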

What are the main error-correction approaches?

While all QEC schemes share this process, the specifics can vary considerably. The most widely studied family of techniques is known as “surface codes,” which spread a logical qubit over a 2D grid of data qubits interspersed with ancilla qubits. Surface codes are well-suited to the superconducting circuit-based quantum computers being developed by Google and IBM, whose physical qubits are arranged in exactly this kind of grid.

But each ancilla qubit can only interact with the data qubits directly neighboring it, which is easy to engineer but relatively inefficient, Williamson said. It’s predicted that using this approach, each logical qubit will require roughly 1,000 physical ones, he adds.

This has led to growing interest in a family of QEC schemes known as low-density parity check (LDPC) codes, Williamson said. These rely on longer-range interactions between qubits, which could significantly reduce the total number required. The only problem is that connecting qubits over larger distances is challenging, although it is simpler for technologies like neutral atoms and trapped ions, in which the qubits can be physically moved around.

A prerequisite for getting any of these schemes working, though, says Roffe, is slashing the error rate of the individual qubits below a crucial threshold. If the underlying hardware is too unreliable, errors will accumulate faster than the error correction scheme can resolve them, no matter how many qubits you add to the system. In contrast, if the error rate is low enough, adding more qubits to the system can lead to an exponential improvement in error suppression.
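A toy calculation shows why such a threshold exists. The sketch below reuses the classical repetition code with majority voting as a stand-in, under the assumption that each copy is flipped independently with probability `p`. The function name `logical_error_rate` is illustrative. This simple model has a threshold of 50%, whereas real quantum codes have much lower ones, so only the qualitative behavior carries over.

```python
from math import comb

def logical_error_rate(p, n):
    """Probability that more than half of n copies are flipped (n odd),
    which is when a majority vote returns the wrong answer."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

# Compare a per-copy error rate below the toy threshold with one above it.
for p in (0.01, 0.6):
    rates = [logical_error_rate(p, n) for n in (3, 5, 7)]
    print(p, [f"{r:.2e}" for r in rates])
```

With `p = 0.01`, each increase in the number of copies cuts the failure rate by a large factor. With `p = 0.6`, adding copies makes the result worse, because the majority is then more likely to be wrong than right.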

The recent Google paper has provided the first convincing evidence that this is within reach. In a series of experiments, the researchers used their 105-qubit Willow chip to run a surface code on increasingly large arrays of qubits. They showed that each time they scaled up the number of qubits, the error rate halved.

“We want to be able to suppress the error rate by a factor of a trillion or something, so there’s still a long way to go,” Roffe told Live Science. “But hopefully this paves the way for larger surface codes that actually meaningfully suppress the error rates to the point where we can do something useful.”
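For a rough sense of scale, the short Python sketch below counts how many scale-up steps a trillion-fold suppression would take if every step kept halving the error rate. That steady halving is an assumption made purely for illustration, since the experiments covered only a few code sizes, and the function name `steps_needed` is hypothetical.

```python
from math import ceil, log2

def steps_needed(target_suppression, factor_per_step=2.0):
    """Number of scale-up steps needed to suppress errors by `target_suppression`,
    assuming every step divides the error rate by `factor_per_step`."""
    return ceil(log2(target_suppression) / log2(factor_per_step))

print(steps_needed(1e12))  # 40, since 2**40 is the first power of two above a trillion
```

Each step also means a larger grid of physical qubits per logical qubit, which is one reason practical machines are expected to be far bigger than today’s devices. A larger improvement per step would shorten the path: under the same assumption, a factor of four per step would need 20 steps.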