There are many debates around artificial intelligence (AI) given the explosion in its capabilities, from worrying whether it will take our jobs to questioning whether we can trust it in the first place.

But the AI being used today is not the AI of the future. Scientists are increasingly convinced we are on an express train to building artificial general intelligence (AGI) — an advanced type of AI that can reason like humans, perform better than us in different domains, and even improve its own code and make itself more powerful.

Experts call this moment the singularity. Some scientists say it could happen as early as next year, but most agree there’s a strong chance that we will build AGI by 2040.

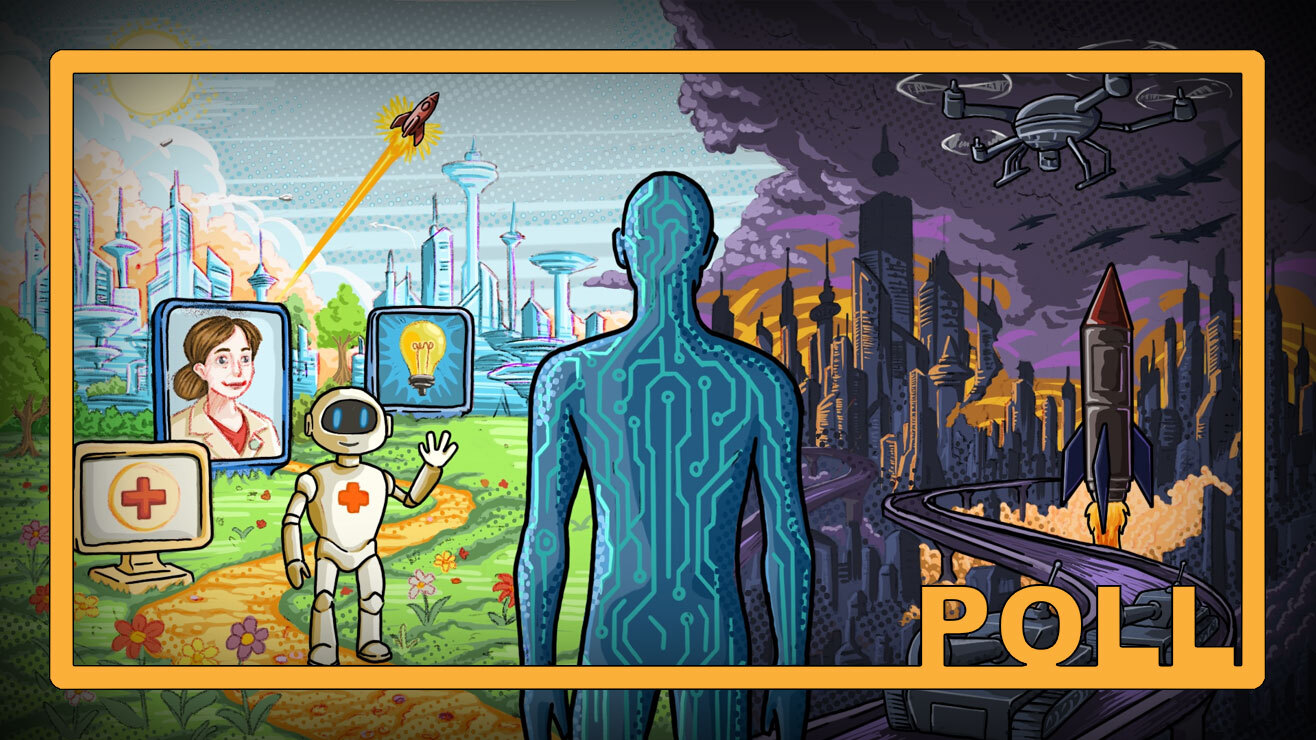

But what then? Birthing an AI that's smarter than humans may bring countless benefits, including accelerating scientific research and making fresh discoveries. But an AI that can build increasingly powerful versions of itself may not be such great news if its interests do not align with humanity's. That's where artificial superintelligence (ASI) comes into play, along with the potential risks of pursuing something far more capable than us.

AI development, as experts have told Live Science, is entering "an unprecedented regime." So, should we stop it before it becomes powerful enough to snuff us out with a snap of its fingers? Let us know in the poll below, and be sure to tell us why you voted the way you did in the comments section.
